本文介绍R1和K1.5以及MCST方法的主要思路。

原标题:张俊林:MCST树搜索会是复刻OpenAI O1/O3的有效方法吗

文章来源:智猩猩GenAI

内容字数:18671字

DeepSeek R1,Kimi K1.5,and rStar-Math: A Comparative Analysis of Large Language Model Reasoning

This article summarizes the key findings of Zhang Junlin’s analysis of three prominent approaches to enhancing the logical reasoning capabilities of large language models (LLMs): DeepSeek R1,Kimi K1.5,and Microsoft’s rStar-Math. The author highlights the similarities,differences,and potential synergies between these methods,emphasizing the importance of high-quality logical trajectory data.

1. DeepSeek R1 and Kimi K1.5: Similar Approaches,Different Scales

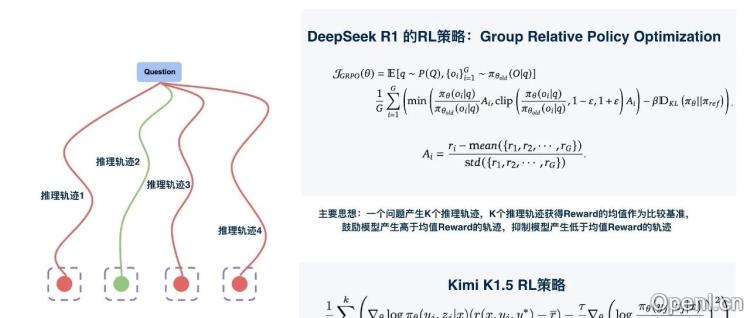

Both DeepSeek R1 and Kimi K1.5 employ a two-stage process: Supervised Fine-tuning (SFT) followed by Reinforcement Learning from Human Feedback (RLHF). Kimi K1.5 can be viewed as a special case of R1. Both methods generate chain-of-thought (COT) data,where the model’s reasoning process is explicitly shown. Crucially,both tolerate errors in intermediate steps of the COT,demonstrating that perfect reasoning in every step is not necessary for achieving strong overall performance. This suggests that LLMs may learn logical connections between fragments of reasoning rather than mastering the entire chain flawlessly,a process potentially more efficient than human reasoning.

2. The Significance of Imperfect Reasoning Trajectories

A key finding is that training data containing intermediate errors in the COT can still yield powerful LLMs. The percentage of errors seems to be more important than the mere presence of errors. High-quality COT data is characterized by a low proportion of erroneous intermediate steps. Multi-stage training,as seen in DeepSeek R1,iteratively refines the quality of the COT data,reducing the error rate in each subsequent stage. This iterative process suggests LLMs might be superior learners of complex reasoning compared to humans.

3. rStar-Math: A Successful MCST Approach

Microsoft’s rStar-Math employs a Monte Carlo Tree Search (MCST) approach combined with a Process Reward Model (PRM). Unlike previous attempts,rStar-Math demonstrates the viability of MCST for LLM reasoning,achieving impressive results with relatively modest computational resources. Its success hinges on a multi-stage training process (similar to curriculum learning) and a refined PRM that incorporates multiple evaluation strategies to improve the accuracy of reward assessment.

4. The Relationship Between R1/K1.5 and MCST

The author argues that the methods used in DeepSeek R1 and Kimi K1.5 are special cases of MCST. They represent random sampling within the search space,while MCST aims for efficient exploration of high-quality paths. By integrating the RL stage of R1 into an effective MCST framework like rStar-Math,a more general and potentially superior method – “MCST++” – can be derived. This combined approach would leverage the search efficiency of MCST with the refinement power of RL.

5. Data Quality as the Primary Bottleneck

The paramount factor in improving LLM reasoning is the acquisition of high-quality COT data. This involves obtaining diverse and challenging problem sets and employing effective methods (like R1’s iterative refinement or MCST) to generate COTs with minimal erroneous intermediate steps. The origin of the data (e.g.,human-generated,model-generated,distilled) is secondary to its quality.

6. A Low-Cost Method for Enhancing LLM Reasoning

The author proposes a low-cost,rapid method for enhancing LLM reasoning capabilities using readily available resources: (1) gather a large set of problems and answers; (2) augment data through problem reformulation; (3) utilize open-source models like DeepSeek R1; (4) generate COT data using R1; (5) optionally,filter low-quality COTs using a robust PRM; (6) fine-tune a base model using a curriculum learning approach; and (7) optionally,incorporate negative examples using DPO. While effective,this method lacks the self-improvement mechanism of iterative models like R1 or MCST++.

联系作者

文章来源:智猩猩GenAI

作者微信:

作者简介:智猩猩旗下账号,专注于生成式人工智能,主要分享技术文章、论文成果与产品信息。

粤公网安备 44011502001135号

粤公网安备 44011502001135号